Webamp is an awesome project by Jordan Eldredge that does an amazing job at recreating Winamp's bits and pieces (some better than others...), but why am I talking about Webamp?

The whole "skin spec" of Winamp is weird and very telling of the time period it was made in. All of those little parts make Winamp what it is.

This includes the mini visualizer.

You don't know what I'm talking about? Don't worry, I'll fill you in on the specifics.

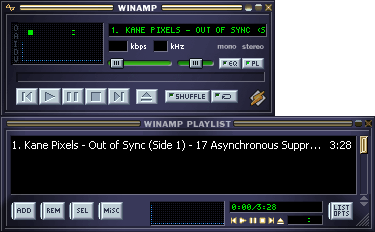

The visualizer I mentioned can be found in Winamp's Main Window and Playlist Editor, and it varies in size between them.

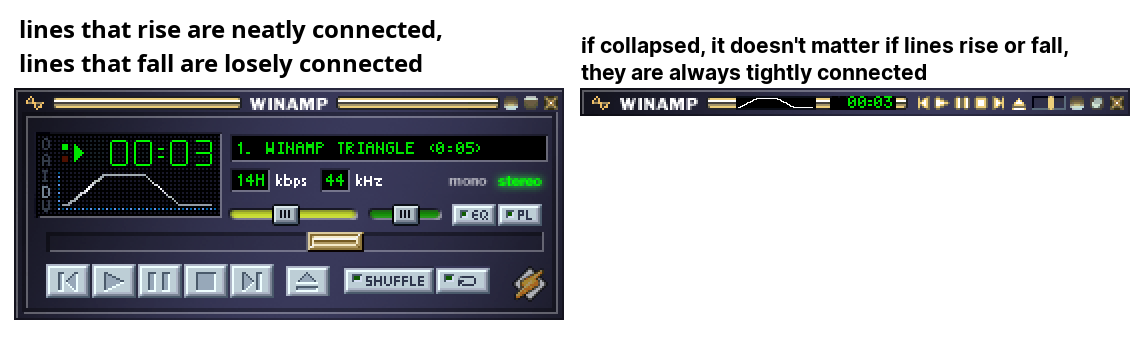

The Main Window features this visualizer in two different places: in the regular Main Window (duh) and when it's collapsed.

They differ in size though.

In the uncollapsed Main Window it's 76x16 pixels, with a general drawing area of 75x16 (double sized) or 75x14 (normal sized).

Collapsed, it has a size of 38x5 pixels (normal sized). For some reason the spectrum analyzer has a total drawing area of 37x5 pixels, a pixel less in width, while the Oscilloscope uses the full 38x5 pixel drawing area.

Note: For some reason, the oscilloscope is drawn differently in this mode.

In double size mode, the visualizer then has a size of 76x10 pixels (an unsubstantiated claim, but it can reasonably be assumed from the Main Window) and a drawing area of 75x10 pixels for both modes.

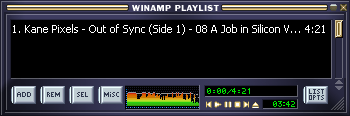

In the Playlist Editor, the visualizer shows up only if you've expanded the window wide enough, and if the Main Window is disabled.

Here it has an area of 72x16 pixels and a general drawing area of 72x14... wait... why is it 72px in width...?

This weird discrepancy in width would later become the standard size for the `<vis/>` object in Wasabi/Winamp3/Modern Skins.

Now that we have that out of the way... how do we draw the Oscilloscope?

Winamp has a few different styles, "Lines", "Solid" and "Dots".

The following code examples are mere recreations of what happens. The code in Winamp is different, but the examples below visually achieve the same effect.

The "Dots" style is simple: it merely plots one pixel per column at the current y position of the amplitude, which is clamped to the pixel height of the canvas. Nothing special.
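A minimal sketch of the dot plotting described above. Everything here is an assumption for illustration rather than Winamp's actual code: the 75x16 drawing area, the one-byte-per-pixel framebuffer, signed 8-bit samples, and the names `sample_to_row` and `draw_dots`.

```c
#include <stdint.h>
#include <string.h>

#define SCOPE_W 75  /* drawing width used in this article */
#define SCOPE_H 16  /* assumed drawing height */

/* Map a signed 8-bit sample (-128..127) to a row, clamped to the canvas.
   Assumption: positive samples move up, i.e. towards row 0. */
int sample_to_row(int8_t s)
{
    int y = (SCOPE_H / 2) - (s * SCOPE_H) / 256;
    if (y < 0) y = 0;
    if (y > SCOPE_H - 1) y = SCOPE_H - 1;
    return y;
}

/* Plot exactly one pixel per column.
   fb is a row-major buffer of SCOPE_W * SCOPE_H bytes (hypothetical layout). */
void draw_dots(uint8_t *fb, const int8_t *samples)
{
    memset(fb, 0, SCOPE_W * SCOPE_H);
    for (int x = 0; x < SCOPE_W; x++) {
        int y = sample_to_row(samples[x]);
        fb[y * SCOPE_W + x] = 1;
    }
}
```

Calling `draw_dots(fb, samples)` with 75 samples leaves exactly one lit pixel in every column, and the clamp keeps very loud samples on the top or bottom row instead of writing outside the buffer.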

The "Solid" style draws the waveform in a filled mode starting from the center. The code looks like this:

    if (y >= 8) {
        top = 8;
        bottom = y;
    } else {
        top = y;
        bottom = 7;
    }

We check whether y is greater than or equal to 8. If it is, we set top to 8 and bottom to y. If it is not, we set top to y and bottom to 7.

Filling every pixel from top to bottom then draws the waveform from a somewhat center point of the canvas (the boundary between rows 7 and 8), with both halves filled, thus making it "solid".
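The top/bottom selection can be turned into a small column filler. This is only a sketch under assumptions: the 75x16 one-byte-per-pixel framebuffer and the name `draw_solid_column` are made up for illustration, and y is expected to be clamped to 0..15 already.

```c
#include <stdint.h>

#define SOLID_W 75  /* drawing width used in this article */
#define SOLID_H 16  /* assumed drawing height */

/* Fill one column between the center and y (inclusive).
   fb is a row-major buffer of SOLID_W * SOLID_H bytes (hypothetical layout).
   y must already be clamped to 0..SOLID_H - 1. */
void draw_solid_column(uint8_t *fb, int x, int y)
{
    int top, bottom;
    if (y >= 8) {
        top = 8;      /* lower half: fill downwards from row 8 */
        bottom = y;
    } else {
        top = y;      /* upper half: fill upwards to row 7 */
        bottom = 7;
    }
    for (int dy = top; dy <= bottom; dy++) {
        fb[dy * SOLID_W + x] = 1;
    }
}
```

For example, y = 12 lights rows 8 through 12 and y = 3 lights rows 3 through 7, so a column never crosses the center line.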

The "Lines" style is a bit more complicated. The result looks similar to what Bresenham's line algorithm would draw, but the approach differs a lot.

    for (int x = 0; x < 75; x++) {
        // y holds the clamped amplitude for column x (computed elsewhere)
        if (x == 0) {
            last_y = y;
        }
        top = y;
        bottom = last_y;
        last_y = y;
        if (bottom < top) {
            int temp = bottom;
            bottom = top;
            top = temp + 1;
        }
        for (int dy = top; dy <= bottom; dy++) {
            drawPixel(x, dy);
        }
    }

"y" is the amplitude of our audio at the current column, and last_y is what y was at the previous column. For the first column (x == 0) there is no previous value, so last_y starts out equal to y.

top is set to y and bottom is set to last_y. last_y is then updated to the current y so that the next column can connect to it.

If bottom is less than top, the two values are swapped so that top represents the smaller value and bottom represents the larger one. The value of top is then incremented by 1 to ensure that the drawing will cover the correct range of pixels without overlapping the previous column's endpoint.

Finally, a loop runs from top to bottom (inclusive), drawing a pixel at every vertical position (dy) for the current x-coordinate. This results in a vertical line being drawn in each column, connecting the current amplitude (y) with the amplitude of the previous column (last_y), creating a connected waveform across the x-axis as it iterates over 75 points.

(had to use ChatGPT for that one, sorry, that whole thing is just too complex for my brain to explain)

Additionally, we pick the color of the Oscilloscope from the current y position of the amplitude, outside of the loop where we plot the pixels:

    int color_index = top;
    Color scope_color = osc_colors[color_index];

This way, each vertical line is drawn in a single color and we avoid a gradient effect on the lines.

I don't think I really need to explain what the Spectrum Analyzer does, but for completeness...

This one's a bit simpler, and I won't go into the coloring here.

Winamp does bar chunking in an interesting way, at least I haven't seen it elsewhere.

    for (int x = 0; x < 75; x++) {
        // Clear the two lowest bits: rounds x down to a multiple of 4
        int i = x & 0xfffffffc;
    }

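This is based on a decompiled winamp.exe, but it works the same.

We perform a bitwise AND on x that clears its two lowest bits and store the result in i. This rounds x down to the nearest multiple of 4, so i only changes on every 4th iteration (0, 0, 0, 0, 4, 4, 4, 4, 8, ...).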

    float uVar12 = (sample[i + 3] + sample[i + 2] + sample[i + 1] + sample[i]) / 4;
    sadata2[x] = uVar12;

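We then average the four adjacent samples starting at i and store the result in uVar12 (or whatever you wanna name it), which we then write to sadata2[x]. Because i only changes every four columns, each group of four columns gets the same value, and that is what forms the bars.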

You can clamp sadata2 to 15, so that it ranges from 0..15.
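Put together, the chunking, averaging and clamping could look like the sketch below. The function name `chunk_bars` and the float input buffer are assumptions, not Winamp's own names. Note that the last group reads up to index 75, so the input has to hold at least 76 values.

```c
#define SA_W 75  /* number of analyzer columns used in this article */

/* Average groups of four input values into bars and clamp them to 0..15.
   in must hold at least 76 values, out must hold SA_W values. */
void chunk_bars(const float *in, float *out)
{
    for (int x = 0; x < SA_W; x++) {
        int i = x & ~3;  /* same as x & 0xfffffffc: round down to a multiple of 4 */
        float avg = (in[i] + in[i + 1] + in[i + 2] + in[i + 3]) / 4.0f;
        if (avg < 0.0f) avg = 0.0f;
        if (avg > 15.0f) avg = 15.0f;
        out[x] = avg;
    }
}
```

Columns 0 to 3 all receive the average of `in[0..3]`, columns 4 to 7 the average of `in[4..7]`, and so on, which gives the four-pixel-wide bars.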

Additionally there's some falloff stuff but I'm sure you can figure that one out yourself. Now comes the interesting part.

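    // A bar that reaches the peak pushes it up and resets the fall speed
    if (sapeaks[x] <= (int)(safalloff[x] * 256)) {
        sapeaks[x] = safalloff[x] * 256;
        sadata3[x] = 3.0f;
    }
    float intValue2 = sapeaks[x] / 256;  // peak position in pixels
    sapeaks[x] -= (int)sadata3[x];       // let the peak fall
    sadata3[x] *= 1.05f;                 // and fall a bit faster next time
    if (sapeaks[x] <= 0) {
        sapeaks[x] = 0;
    }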

This is the real juicy stuff. We first compare sapeaks[x] to the product of safalloff[x] and 256 (sapeaks holds the peak position scaled by 256, which leaves room for sub-pixel movement). If sapeaks[x] is less than or equal to this value, we set sapeaks[x] to the same value and initialize sadata3[x] to 3.0f.

Next, we calculate intValue2 as sapeaks[x] divided by 256, which brings the peak back to pixel units. Then, we subtract the integer value of sadata3[x] from sapeaks[x], and after that, we multiply sadata3[x] by 1.05 to increase its falloff speed slightly for the next time this column is processed.

If after these calculations sapeaks[x] falls below or equals zero, we clamp it back to zero, preventing any negative values. This logic essentially creates a smooth falloff effect, giving the visualizer that nice descending peak behavior. The gradual, slowly accelerating decrement in sapeaks[x] is what creates the visual of the peak falling until it reaches zero or gets refreshed by a higher value from the sample data.

(that too was explained by ChatGPT, sorry.)
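To watch the peak behavior in isolation, here is a sketch of the same logic for a single column. The `Peak` struct and `peak_tick` function are hypothetical names. The constants 256, 3.0f and 1.05f are taken from the snippet above.

```c
typedef struct {
    int   peak;   /* peak position scaled by 256 */
    float speed;  /* current fall speed, in 1/256ths of a pixel per tick */
} Peak;

/* Advance one column's peak by one tick.
   bar is the current bar height in pixels.
   Returns the peak position in whole pixels for this tick. */
int peak_tick(Peak *p, float bar)
{
    int bar_scaled = (int)(bar * 256);
    if (p->peak <= bar_scaled) {
        p->peak = bar_scaled;  /* the bar pushes the peak up */
        p->speed = 3.0f;       /* and resets the fall speed */
    }
    int shown = p->peak / 256;
    p->peak -= (int)p->speed;  /* fall */
    p->speed *= 1.05f;         /* accelerate slightly */
    if (p->peak <= 0) {
        p->peak = 0;
    }
    return shown;
}
```

Feeding it a bar of 10 once and then silence makes the returned value start at 10 and drop slowly at first, then faster, until it rests at 0.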

This gives the Analyzer its unique look that has only existed on Winamp and WACUP... at least until now.

I'm getting to that! Don't worry...

The Web Audio API provides Oscilloscope and FFT data to visualize audio... except that it's not good.

getByteFrequencyData/getFloatFrequencyData return an unweighted FFT whose magnitudes are on a logarithmic (decibel) scale, which probably isn't enough even for generic applications. At best you'd want your result to be weighted for a more accurate representation.

getByteTimeDomainData returns the audio as is, though the center point isn't at 0 but at 128. That's okay, but for applications like Webamp you have to recenter it to 0 before you do any further processing.

getFloatTimeDomainData has it centered at 0, though the audio data is so quiet that you're better off using getByteTimeDomainData and recentering it for your own purposes.
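The recentering itself is a single subtraction per sample. It is sketched here in C to match the other snippets, on a plain byte buffer standing in for what getByteTimeDomainData fills in. The function name `recenter_bytes` is an assumption for illustration.

```c
#include <stddef.h>
#include <stdint.h>

/* Convert unsigned bytes centered on 128 into signed values centered on 0. */
void recenter_bytes(const uint8_t *in, int8_t *out, size_t n)
{
    for (size_t k = 0; k < n; k++) {
        out[k] = (int8_t)(in[k] - 128);  /* 0..255 becomes -128..127 */
    }
}
```

Silence (128) becomes 0, and the extremes 0 and 255 become -128 and 127, which is the range the oscilloscope code above expects to clamp against.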

I've prepared a demo page that allows you to load your own music to show you the difference between custom rendering and FFT code vs. relying on the Web Audio API.

You can get this modified version of FFTNullsoft here.